Ollama is a great way to run many open Large Language Models (LLMs). With Ollama, you can run Google Gemma 2, Phi 4, Mistral, and Llama 3 on your machine or in the cloud. You can also host these open LLMs as APIs using Ollama. In this post, you will learn how to host Gemma 2 (2b) with Ollama 0.5.x on Google Cloud Run; let’s get started!

![How to run (any) open LLM with Ollama on Google Cloud Run [Step-by-step]](/images/ollama-google-cloud-run/01ollama-google-cloud-run.jpg)

Table of contents

- Why Google Cloud Run

- Create a GCS bucket

- Deploy Ollama on Google Cloud Run

- Testing Gemma 2 with Ollama on Google Cloud Console

- Conclusion

Why Google Cloud Run

Good question. I have written multiple blog posts about Google Cloud Run and given a couple of talks in past years. Some great reasons to use Google Cloud Run to host open LLMs with serverless containers are:

- Resources (CPU, memory, and even GPU) are allocated in a serverless way, meaning you pay only for the time you use them.

- You don’t need to send data out of your VPC, which puts security first.

- You get more cost control: because the LLMs are self-hosted, you define how resources are allocated instead of paying for the number of tokens sent and received.

Now that that's out of the way, let’s open the Google Cloud Console and deploy Gemma 2 (2b, i.e., 2 billion parameters) on Google Cloud Run.

Create a GCS bucket

First, you need a Google Cloud project: either an existing one or a new one. This tutorial assumes a new(ish) project that is already selected in the console. With the project selected, create a new Google Cloud Storage (GCS) bucket. This bucket will store the files for the open LLM, which is Gemma 2 2b in this guide.

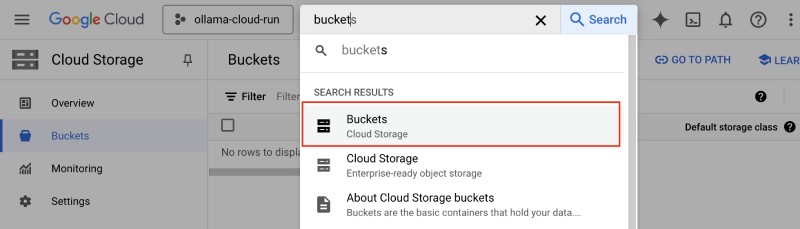

To create a new bucket, search for bucket on the search bar:

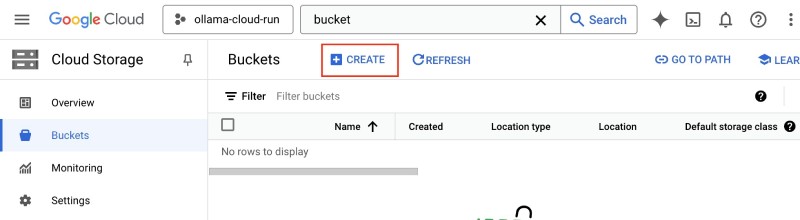

Select the Buckets option, as shown above, and then click + Create on the Buckets page:

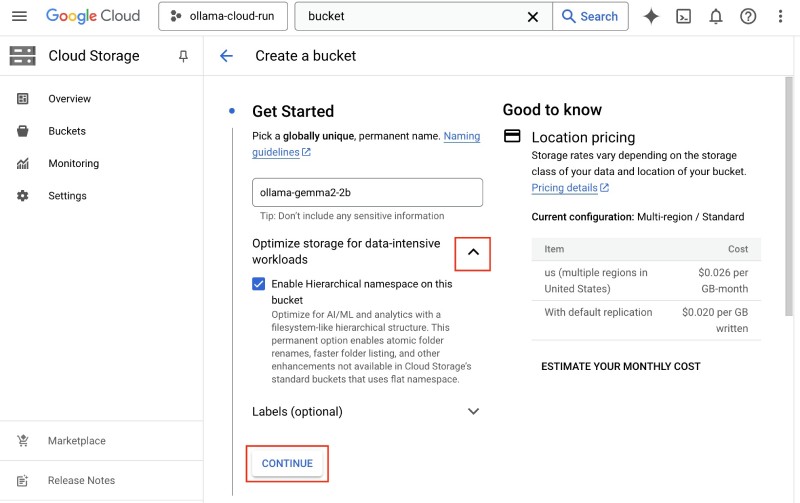

After that, give the bucket a unique name, like ollama-gemma2-2b-xyz. Bucket names are globally unique across Google Cloud, so you might need a suffix. Then click the down arrow beside Optimize storage for data-intensive workloads and check the Enable Hierarchical namespace on this bucket checkbox, as shown below:

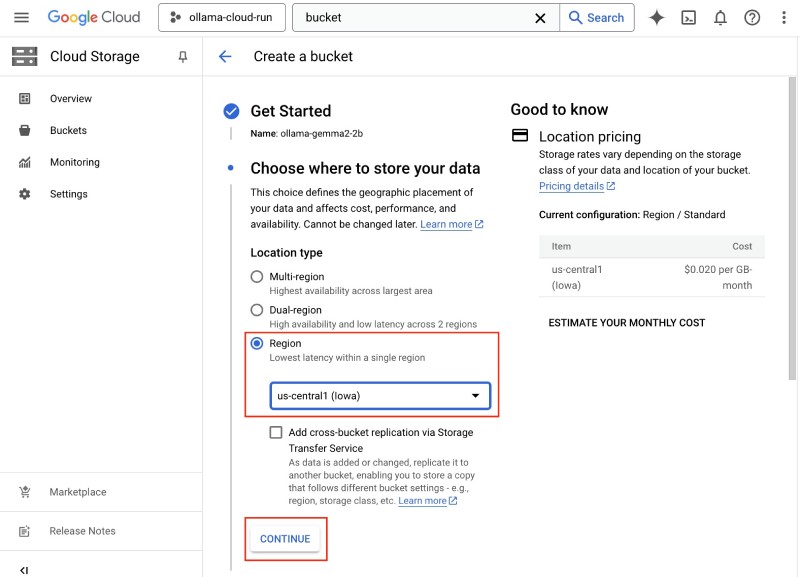

Hierarchical namespace helps optimize access to the model files later. After that, set the location type to a single Region and choose us-central1 (Iowa), as follows:

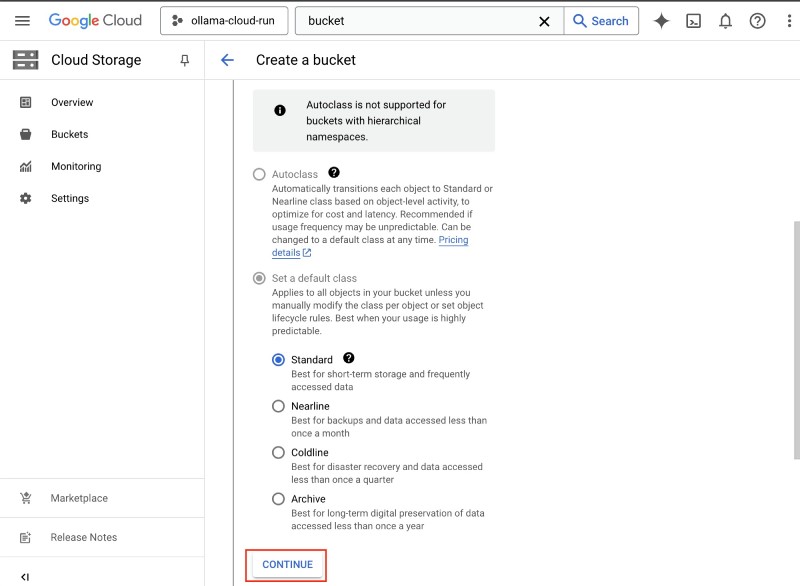

Then click Continue. Next, keep the Storage Class as Standard and then click Continue:

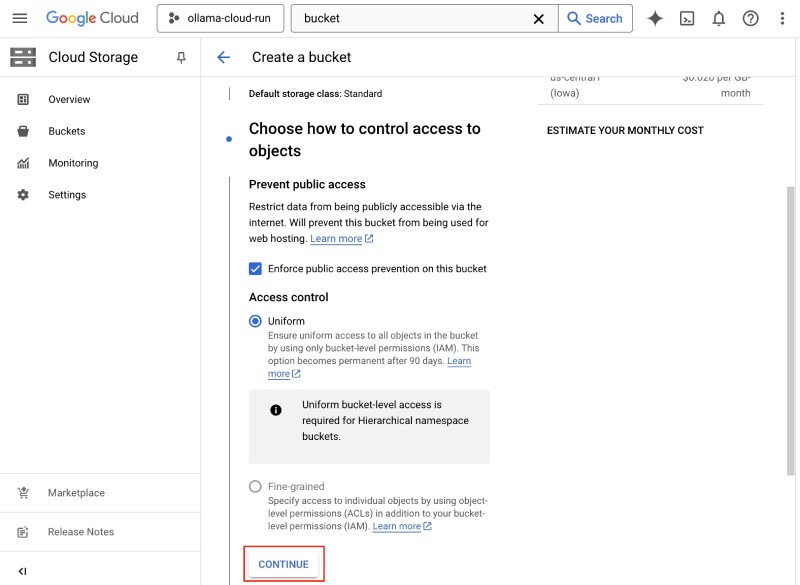

After that, keep access control at the bucket level (Uniform, not Fine-grained) and click Continue:

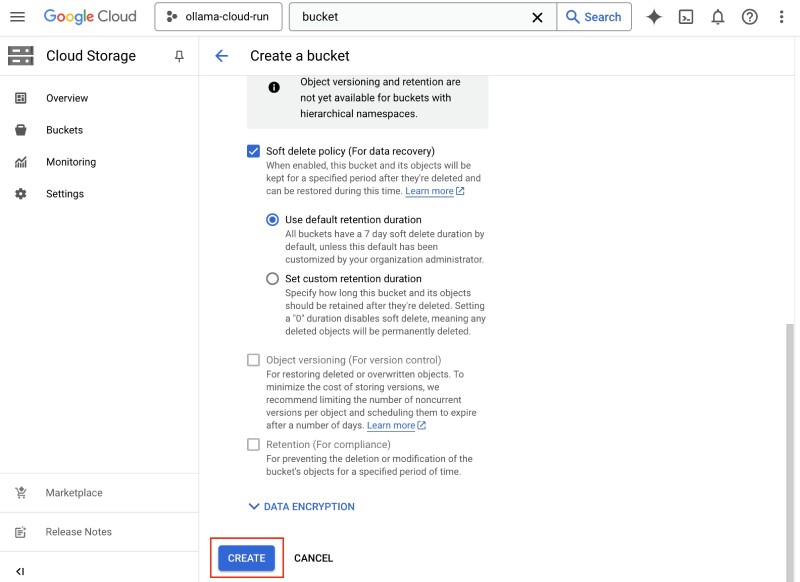

Then, keep the data protection policy as shown below, and click Create to create the bucket.

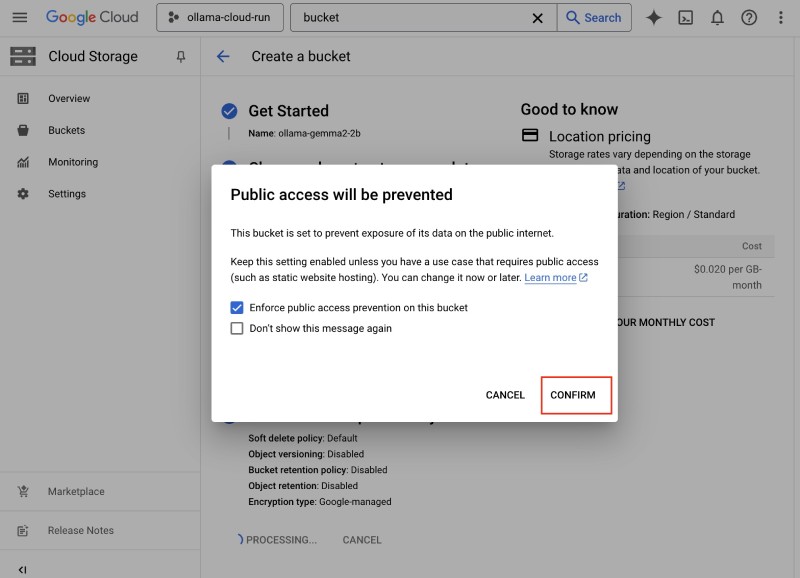

Next, it will ask you to confirm the access as below:

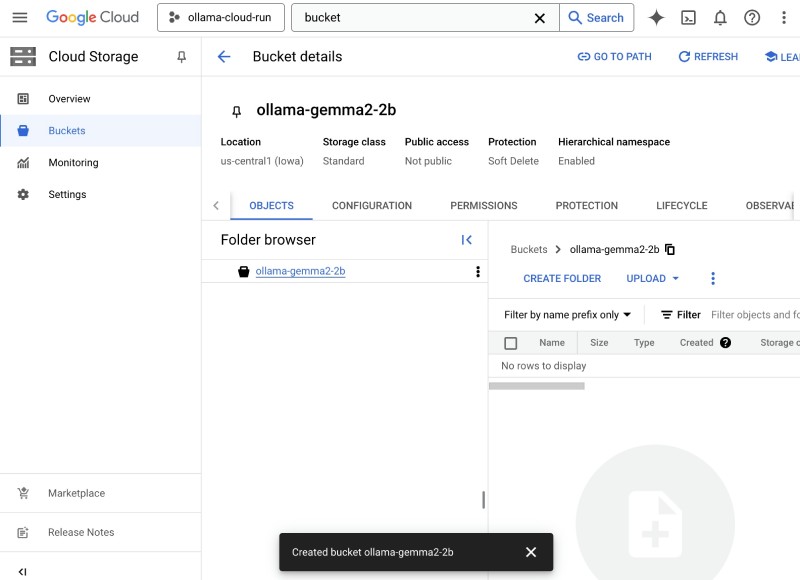

Click Confirm. The bucket takes a moment to be created; once it is, it will look like the below:

After the bucket is created, the next task is to deploy Ollama on Google Cloud Run.
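If you prefer the command line (for example, in Cloud Shell), the console steps above can be approximated with a single `gcloud storage buckets create` command. This is a sketch, not the exact request the console sends. It assumes the gcloud CLI is authenticated and pointed at your project, and `ollama-gemma2-2b-xyz` is a placeholder that you should replace with your own unique name.

```shell
# Placeholder: bucket names are global, so pick your own unique suffix.
BUCKET="ollama-gemma2-2b-xyz"

# Single region us-central1, Standard storage class, uniform (bucket-level)
# access control, and hierarchical namespace, mirroring the console choices.
# Hierarchical namespace can only be enabled together with uniform access.
gcloud storage buckets create "gs://${BUCKET}" \
  --location=us-central1 \
  --default-storage-class=STANDARD \
  --uniform-bucket-level-access \
  --enable-hierarchical-namespace
```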

Deploy Ollama on Google Cloud Run

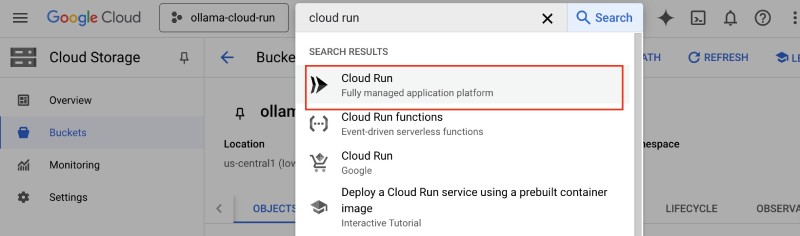

To deploy Ollama on Google Cloud Run, search for cloud run on the search bar:

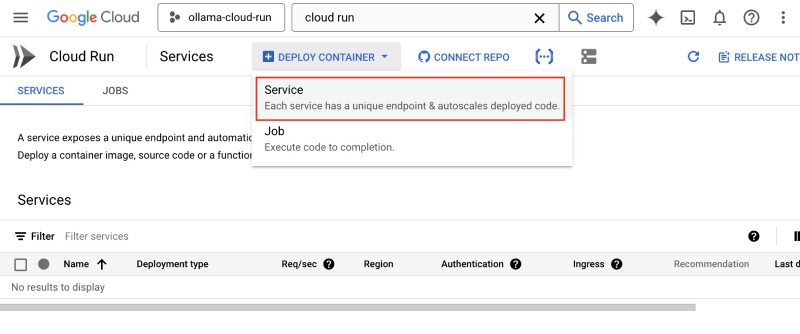

Then click Cloud Run to go to the Cloud Run page. On that page, click on Deploy Container and then click on Service as shown below:

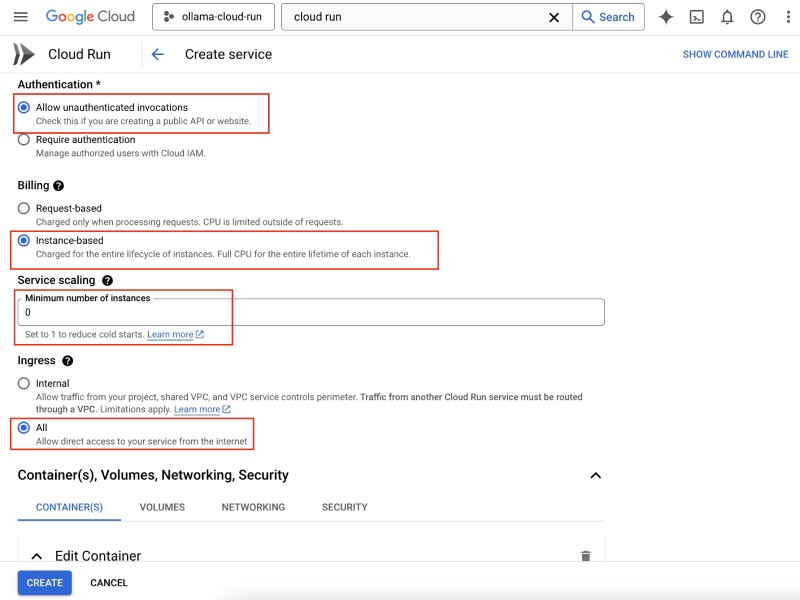

You will do all the important configuration on this page, so be careful. Fill in the form as follows:

- In the Container image URL, type in `ollama/ollama:0.5.7`. At the time of writing, 0.5.7 is the latest release and is available as an image on Docker Hub.

- In the Service name, type in `ollama-gcs`.

- Make sure the region is the same as the bucket's, which is `us-central1`.

- For now, select Allow unauthenticated invocations. This makes the service accessible to anyone on the web; we are doing it only for the sake of this demo. In a real-life scenario, you would put it behind authentication.

At this point, the form will look like the below:

Then, for Billing, select Instance-based and keep the Minimum number of instances at 0. This is what makes it serverless: when no requests are coming in, no instances are running, which saves you money. After that, set Ingress to All so that the service accepts traffic from the internet. At this point, your form will look as follows:

Next, you will link the GCS bucket as a Cloud Run volume.

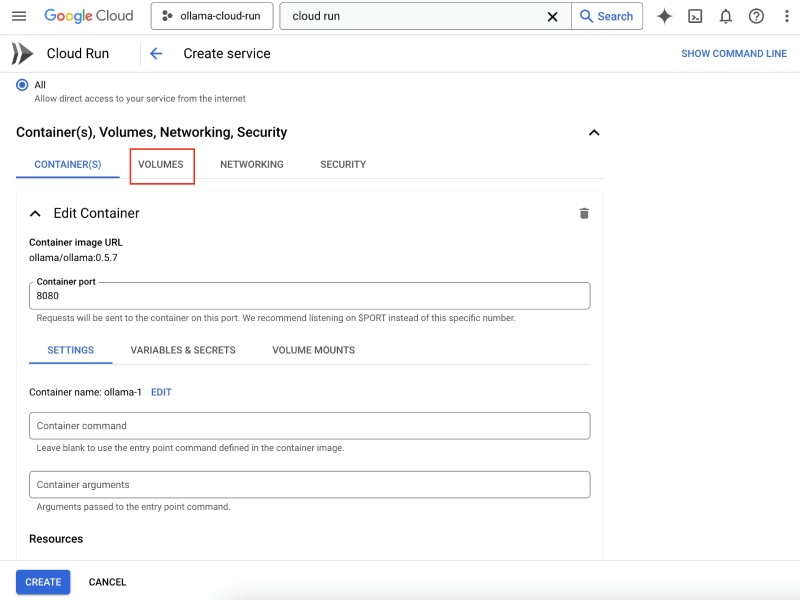
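For reference, the console choices made so far map roughly to the `gcloud run deploy` command below. It is a sketch under a few assumptions:

- `--no-cpu-throttling` is, as far as I know, the CLI counterpart of instance-based billing.
- `OLLAMA_HOST` is set here already, even though the form sets it in a later step. Cloud Run sends traffic to port 8080, and the revision will not start successfully unless Ollama listens there.

```shell
# Deploy the Ollama image from Docker Hub as the ollama-gcs service,
# publicly reachable, scaling to zero when idle.
gcloud run deploy ollama-gcs \
  --image=docker.io/ollama/ollama:0.5.7 \
  --region=us-central1 \
  --allow-unauthenticated \
  --no-cpu-throttling \
  --min-instances=0 \
  --ingress=all \
  --set-env-vars=OLLAMA_HOST=0.0.0.0:8080
```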

Wire up the GCS bucket as a Cloud Run volume

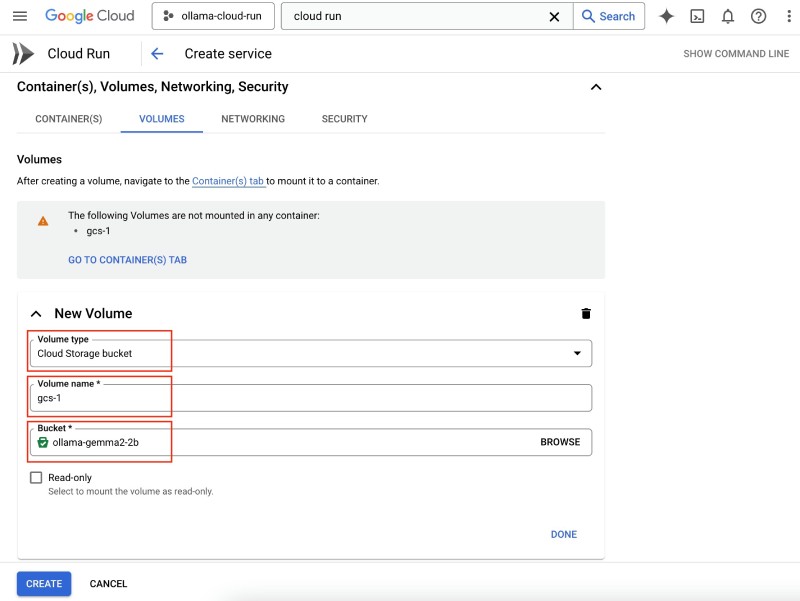

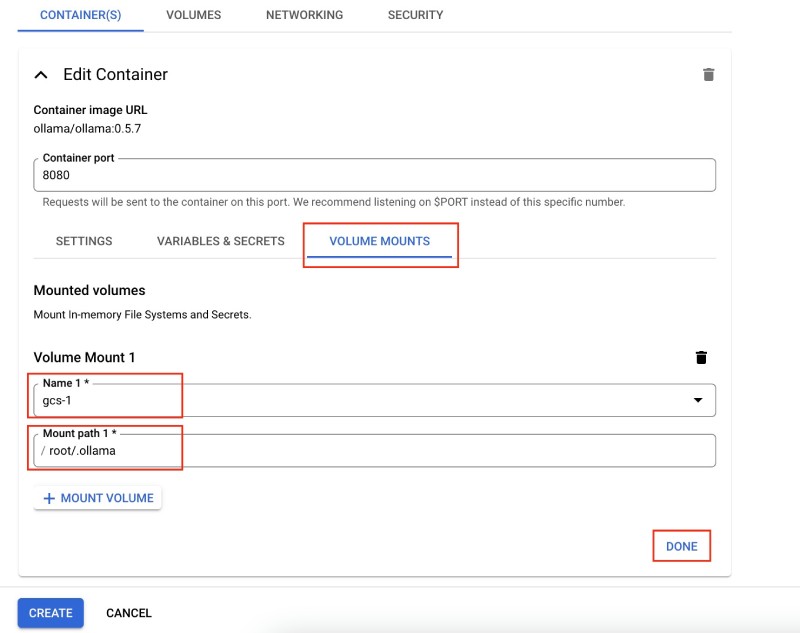

This is an important part, where you link the Google Cloud Storage (GCS) bucket created in the previous section as a volume for the Cloud Run container. Click the Volumes tab in the Container(s), Volumes, Networking, Security section:

Then click Add Volume and select Cloud Storage Bucket as the Volume type. Name it gcs-1. For the Bucket, click Browse and select the bucket you created in the previous step, which will be named something like ollama-gemma2-2b-xyz. Then click Select. At this point, the form will look like the below:

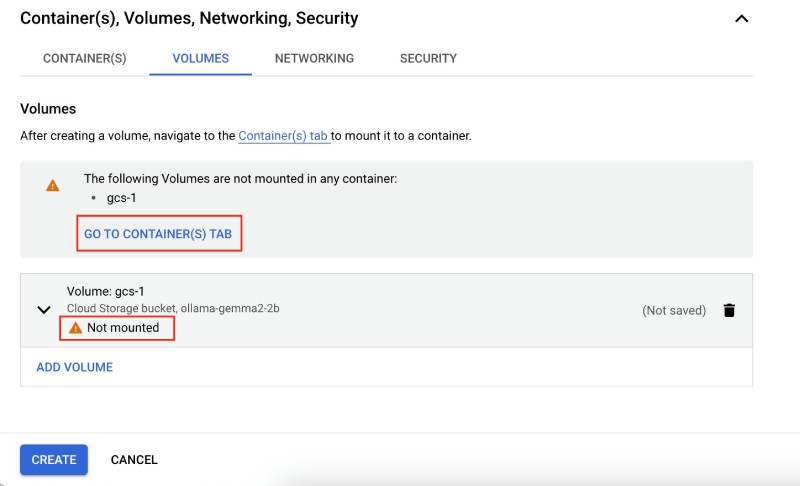

Leave the Read-only checkbox unchecked, as the Cloud Run instances will write files to this bucket. Then click Done. It will say the bucket is Not mounted, which is fine.

After that, click Go to container(s) or the Container(s) tab. On this tab, click the Volume mounts sub-tab, then click Mount Volume. Next, select gcs-1 as Name 1 and type /root/.ollama in Mount path 1 (don’t miss the . in front of ollama). This is where Ollama stores its models. When models are pulled (downloaded), they are saved to this volume and therefore to the bucket. Because they live in the bucket, other instances of the service can use them too.
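The same volume and mount can also be added to an existing service from the CLI. This is a minimal sketch that assumes the service name and region used in this guide; replace the placeholder bucket name with yours. It also selects the second-generation execution environment explicitly. Treat that flag as a conservative assumption rather than a hard requirement.

```shell
# Placeholder: use the name of the bucket you created.
BUCKET="ollama-gemma2-2b-xyz"

# Add the bucket as a volume named gcs-1 (writable, since readonly is not set)
# and mount it at /root/.ollama, where Ollama stores pulled models.
gcloud run services update ollama-gcs \
  --region=us-central1 \
  --execution-environment=gen2 \
  --add-volume=name=gcs-1,type=cloud-storage,bucket="${BUCKET}" \
  --add-volume-mount=volume=gcs-1,mount-path=/root/.ollama
```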

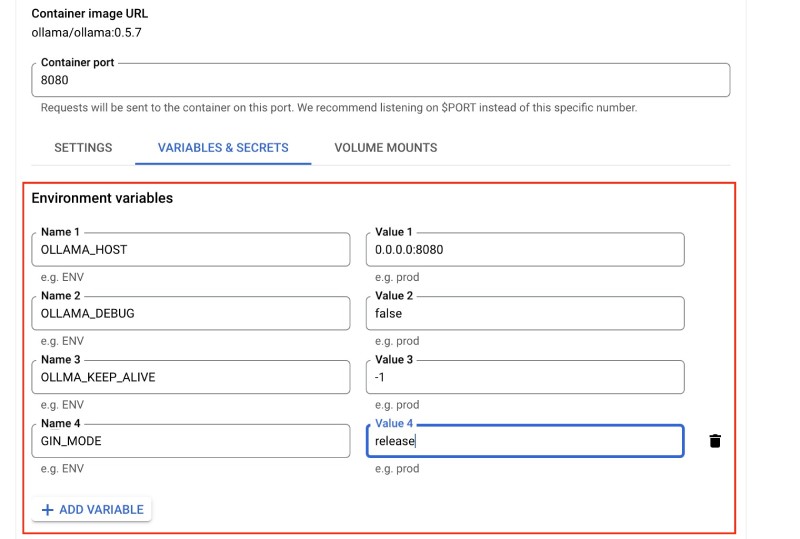

Then click Done. Next, you will set some environment variables for the container. To do this, click Container: ollama-1 under the Containers tab, then click the Variables and Secrets sub-tab. After that, click Add Variable and fill in the following in Name 1 and Value 1:

- `OLLAMA_HOST` with value `0.0.0.0:8080`: this makes Ollama listen on port 8080 instead of its default port, 11434. Port 8080 is the port Cloud Run sends requests to by default.

Similarly, add three more variables using the Add Variable button and fill up the following values:

- `OLLAMA_DEBUG` with value `false`: turns off Ollama's debug logging.

- `OLLAMA_KEEP_ALIVE` with value `-1`: keeps the model weights loaded in memory (on the GPU, if one is used) indefinitely instead of unloading them after an idle period.

- `GIN_MODE` with value `release`: removes Go Gin debug-related messages; Ollama uses Gin under the hood.

Your Variables and Secrets section will look like the below when you are done:

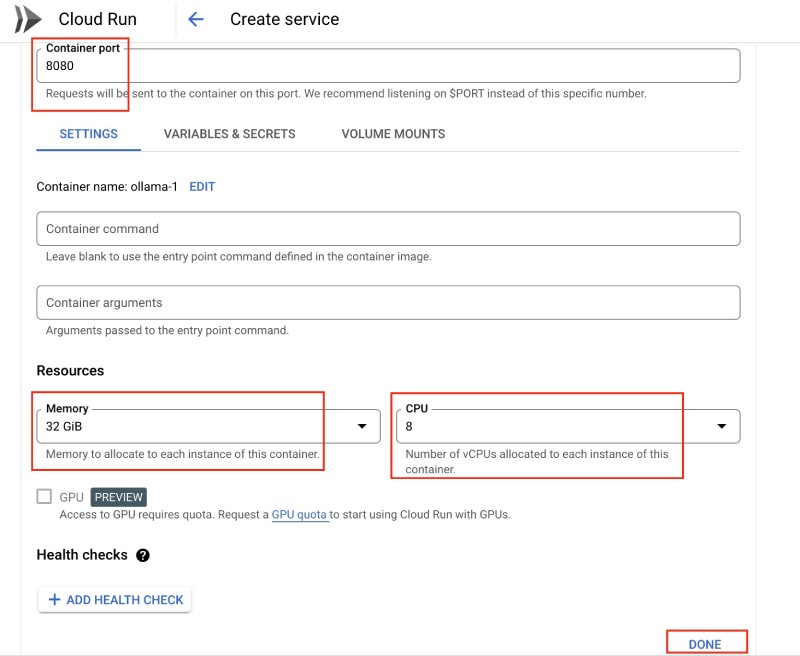
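From the CLI, all four variables can be set in one command on the existing service. `--update-env-vars` adds or overwrites only the listed keys and leaves any others untouched. This sketch assumes the service name and region used throughout this guide.

```shell
# Set the four Ollama/Gin environment variables on the ollama-gcs service.
gcloud run services update ollama-gcs \
  --region=us-central1 \
  --update-env-vars=OLLAMA_HOST=0.0.0.0:8080,OLLAMA_DEBUG=false,OLLAMA_KEEP_ALIVE=-1,GIN_MODE=release
```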

After that, click the Settings tab and set the Memory to 32 GiB and the CPU to 8. You can request GPU access for your project by clicking the GPU quota link and filling out a form. Gemma 2 on Ollama will run (a bit slower, though) without the GPU.

When you are done editing the Settings section, it will look like the above; then click Done. If you cannot allocate 32 GiB of memory and 8 CPUs, it might be because your account is new, and you can request a quota increase. Even with 512 MiB of memory and 1 CPU, which you should have without a quota increase, you can run smollm2:135m (135 million parameters). The model is 271 MB, which fits in the 512 MiB of allocated memory.

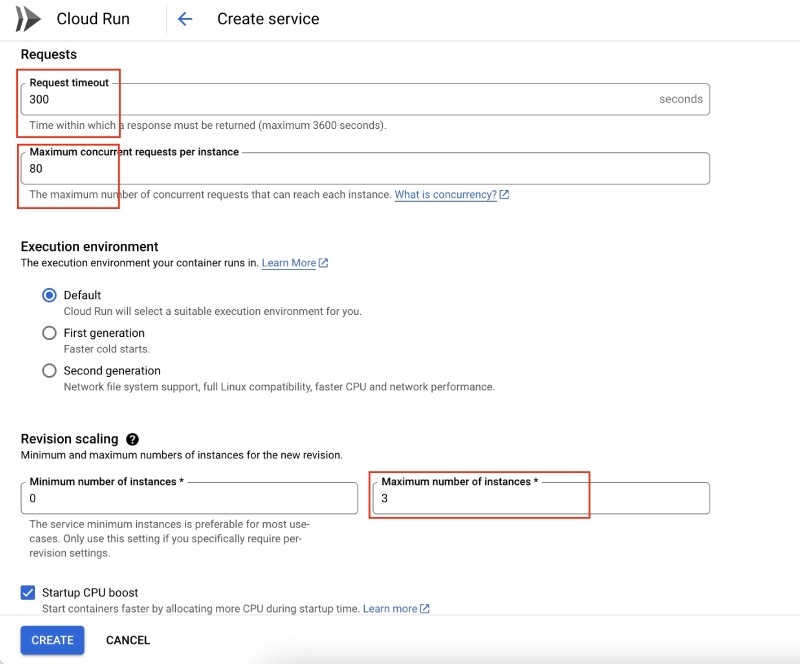
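The resource settings can likewise be changed from the CLI. This sketch assumes the same service name and region. The smaller configuration for smollm2:135m is left as a comment.

```shell
# Gemma 2 (2b) on CPU only: 8 vCPUs and 32 GiB of memory, as in the form.
gcloud run services update ollama-gcs \
  --region=us-central1 \
  --cpu=8 \
  --memory=32Gi

# Without a quota increase, 1 vCPU and 512 MiB is enough for smollm2:135m:
# gcloud run services update ollama-gcs --region=us-central1 --cpu=1 --memory=512Mi
```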

Then, move on to the Requests section. Here, keep the Request timeout at 300 seconds (5 minutes), the default value, and keep Maximum concurrent requests per instance at 80. Keep the Minimum number of instances at 0. The only value you should change is Maximum number of instances: set it to 3 or 4 at most. If someone attacks your service, it is better for requests to time out or return a server error than for the service to scale out a lot and cost you loads of money. Your Cloud Run service creation form will look like the below:

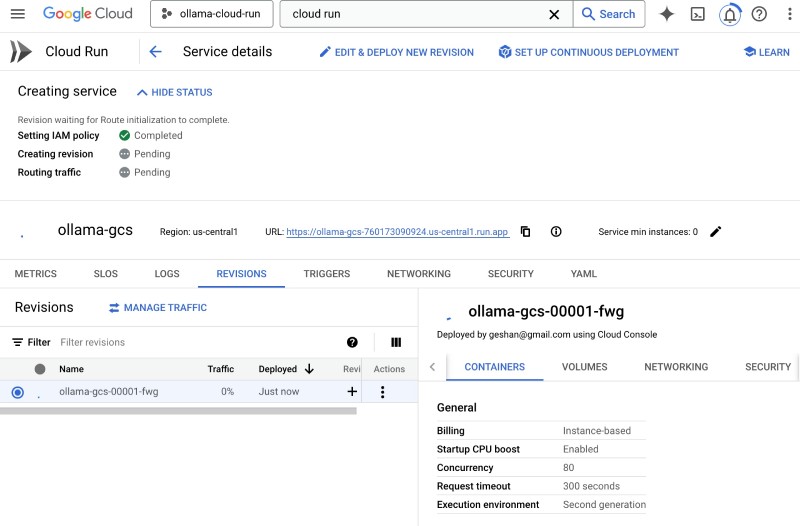
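The Requests settings map to a few more `gcloud run services update` flags. This is again a sketch against the same service:

```shell
# 300 s request timeout, 80 concurrent requests per instance,
# scale to zero when idle, and at most 3 instances to cap costs.
gcloud run services update ollama-gcs \
  --region=us-central1 \
  --timeout=300 \
  --concurrency=80 \
  --min-instances=0 \
  --max-instances=3
```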

Then click Create and wait: the Ollama image is about 1.5 GB, so it takes a while to start. It will look like the following while it is deploying:

It will look like the below after it is deployed successfully:

Click the service URL to see if it is working:

It will show Ollama is running as above if everything went fine. At this point, Ollama has no models to run any inference. So, in the next section, you will pull in and test Gemma 2:2b with Ollama using the Google Cloud Console. Gemma 2 will be the first model for this instance of Ollama.
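Instead of copying the URL from the console, you can also read it with gcloud and run the same check with curl. This assumes the service name and region used in this guide:

```shell
# Look up the service URL.
OLLAMA_URL="$(gcloud run services describe ollama-gcs \
  --region=us-central1 \
  --format='value(status.url)')"

# Ollama answers GET / with the plain-text message "Ollama is running".
curl "${OLLAMA_URL}"
```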

Testing Gemma 2 with Ollama on Google Cloud Console

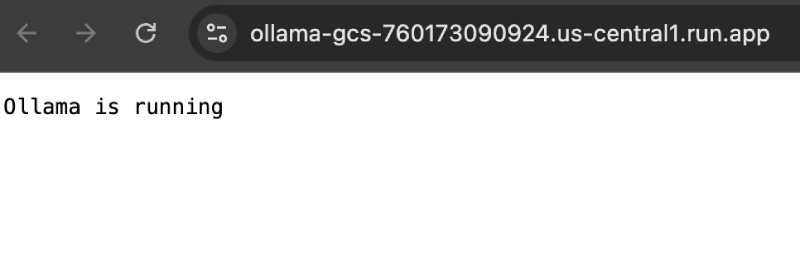

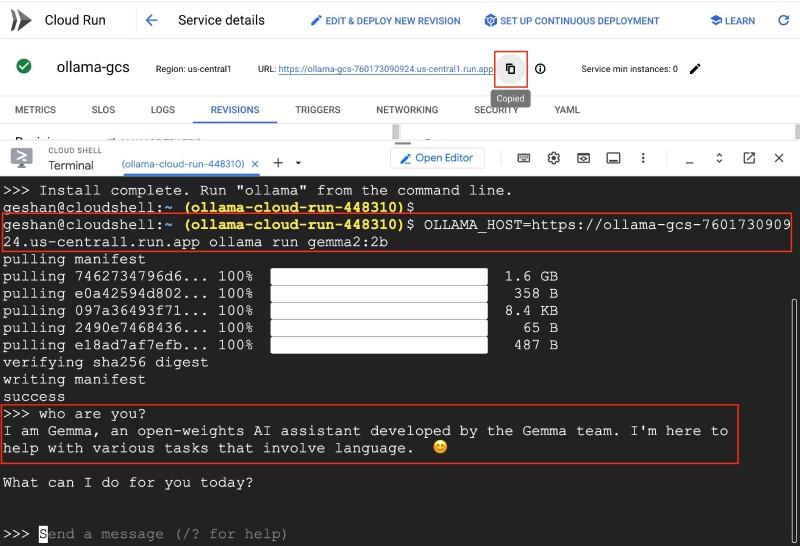

To test Gemma 2 (or any other model that can run on Ollama), go back to the Cloud Run service page in the Google Cloud Console and click the Cloud Shell button (or press G and then S on your keyboard). This opens the Google Cloud Shell terminal.

In the terminal, run `curl -fsSL https://ollama.com/install.sh | sh` to install Ollama; the command is taken from the Ollama Linux installation page. Let it run, and it will show output like the one below:

Then, copy the URL of your service, which will be something like https://ollama-gcs-<some-number-here>.us-central1.run.app. You can use the copy icon beside the URL. After that, execute the following command on your terminal:

```shell
OLLAMA_HOST=<copied-url> ollama run gemma2:2b
```

This will download (pull) Gemma 2 2b, save it in the GCS bucket (the linked volume), and then let you chat with it. You can ask "who are you?" and Gemma will reply:

You can pull any other model Ollama supports and start using it. Bear in mind that a model needs to fit in the memory allocated to the service to run. For example, you can pull llama3.3:70b by Meta, phi4:14b by Microsoft, deepseek-r1:8b by DeepSeek (which is getting very popular), or even smollm2:135m. smollm2:135m is only 271 MB in size, while the other models are several GB.
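You may want to pull a model without opening a chat session, for instance after leaving the chat with /bye. In that case, you can export `OLLAMA_HOST` once and use `ollama pull` and `ollama list`. The URL below is the same placeholder as above; replace it with your service URL.

```shell
# Placeholder: replace with your service URL. Exporting OLLAMA_HOST points
# every ollama command in this shell at the Cloud Run service.
export OLLAMA_HOST="https://ollama-gcs-<some-number-here>.us-central1.run.app"

# Pull a model into the bucket-backed volume without starting a chat.
ollama pull smollm2:135m

# List the models the service can now serve.
ollama list
```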

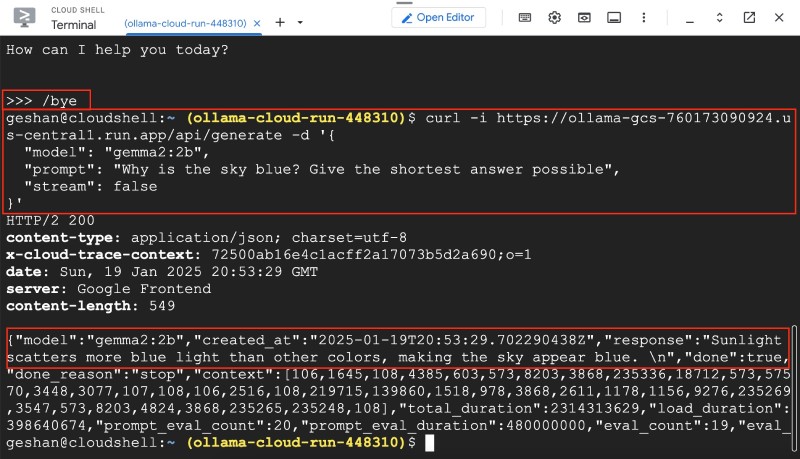

Type /bye to exit the ollama CLI. Now that gemma2:2b is downloaded, you can also test it with a cURL command like the one below:

```shell
curl -i https://ollama-gcs-<some-number-here>.us-central1.run.app/api/generate -d '{
  "model": "gemma2:2b",
  "prompt": "Why is the sky blue? Give the shortest answer possible",
  "stream": false
}'
```

It will give an output as follows:

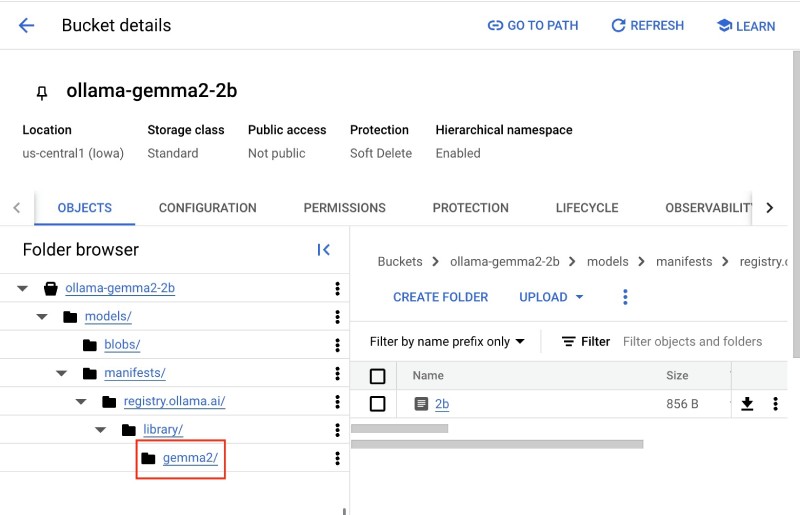
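You can print only the generated text instead of the full HTTP response. To do so, drop `-i` and pipe the JSON body through jq, which is available in Cloud Shell. The URL is the same placeholder as above:

```shell
# Placeholder: replace with your service URL.
OLLAMA_URL="https://ollama-gcs-<some-number-here>.us-central1.run.app"

# With "stream": false, /api/generate returns a single JSON object whose
# "response" field holds the model's answer.
curl -s "${OLLAMA_URL}/api/generate" -d '{
  "model": "gemma2:2b",
  "prompt": "Why is the sky blue? Give the shortest answer possible",
  "stream": false
}' | jq -r '.response'
```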

If you browse the bucket's contents in the console, you will find the Gemma 2 files there.
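You can also list those files from Cloud Shell. This sketch makes two assumptions. First, it uses the placeholder bucket name from earlier. Second, it assumes Ollama keeps its model manifests and weight blobs under a models/ directory inside /root/.ollama, which is the root of the mounted bucket here.

```shell
# Placeholder: use the name of the bucket you created.
BUCKET="ollama-gemma2-2b-xyz"

# Recursively list what Ollama wrote to the bucket-backed /root/.ollama volume.
gcloud storage ls --recursive "gs://${BUCKET}/models/"
```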

Google Cloud Run makes it easy to run any LLM on Ollama. You can run Phi 4, Llama 3, or any other model: you only need to pull it and then run it with the CLI or send a POST request with curl. You can also use libraries like LiteLLM to call the model from your apps through Ollama’s APIs. Please explore Ollama more on your own. You can also use Open WebUI to put a GUI on top of Ollama running the Gemma 2 LLM.
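Besides its native /api endpoints, Ollama also exposes an OpenAI-compatible Chat Completions endpoint. That is often the simplest way to plug the service into existing client code. Here is a minimal sketch with curl and jq, using the same placeholder URL:

```shell
OLLAMA_URL="https://ollama-gcs-<some-number-here>.us-central1.run.app"  # placeholder

# OpenAI-style request body; the reply text is in choices[0].message.content.
curl -s "${OLLAMA_URL}/v1/chat/completions" \
  -H "Content-Type: application/json" \
  -d '{
    "model": "gemma2:2b",
    "messages": [{"role": "user", "content": "Who are you?"}]
  }' | jq -r '.choices[0].message.content'
```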

Conclusion

It is easy to run any LLM with Ollama on Google Cloud Run. Be careful with access, as the Ollama API allows anyone who can reach it to pull and even delete models. With Cloud Run, you pay for the resources only when you use them, which makes it ideal for experiments. I hope you learned something new from this post; keep experimenting.
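On that note about access, you may want to close the service to the public once you are done experimenting. One way is to remove the `allUsers` invoker binding and call the service with an identity token instead. This sketch assumes the service name and region from this guide. It also assumes your account holds the Cloud Run Invoker role, which project owners do.

```shell
# Stop allowing unauthenticated invocations.
gcloud run services remove-iam-policy-binding ollama-gcs \
  --region=us-central1 \
  --member=allUsers \
  --role=roles/run.invoker

# Authenticated callers pass an identity token with each request.
OLLAMA_URL="$(gcloud run services describe ollama-gcs \
  --region=us-central1 \
  --format='value(status.url)')"
curl -H "Authorization: Bearer $(gcloud auth print-identity-token)" "${OLLAMA_URL}"
```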